In most engineering careers, we encounter signals in the form of noise, disturbance, interference, and so on. As engineers, and in particular as control or communication engineers, we need to handle these signals so that they do not cause problems for the original system. But how do we identify such signals? The answer lies in the theory of probability: we classify them as random processes, and they typically follow some distribution such as Gaussian (normal), exponential, or uniform. Before jumping into this theory, I would like you to understand some basic concepts of probability.

Some definitions of probability theory are given below:

Experiment: An experiment is a procedure, real or hypothetical, that produces some result. It is often denoted E (not to be confused with expectation). For example, rolling a die 3 times is an experiment.

Event: A set of outcomes of an experiment is called an event. For the experiment above (rolling a die 3 times), event A could be "a six appears all 3 times" and event B could be "two sixes and one five appear".

Sample Space: The set of all possible outcomes of an experiment is called the sample space. The letter S is used to denote it.

Probability: The probability of an event is a measure of the chance that the event occurs. For example, when a fair die is rolled once there are 6 equally likely outcomes {1,2,3,4,5,6}, so the probability of rolling a 1 is 1/6, or about 16.67%.
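As a minimal sketch of the die example, the snippet below computes a probability by counting outcomes. It assumes every outcome in the sample space is equally likely (a fair die); the helper name `probability` is made up for this illustration.

```python
from fractions import Fraction


def probability(event, sample_space):
    """Counting rule for equally likely outcomes: favourable / total."""
    favourable = set(event) & set(sample_space)
    return Fraction(len(favourable), len(sample_space))


# Sample space S for a single roll of a six-sided die.
S = {1, 2, 3, 4, 5, 6}

p_one = probability({1}, S)
print(p_one)          # exact value as a fraction
print(float(p_one))   # the same value as a decimal
```

Using `Fraction` keeps the result exact, which makes it easy to compare against hand calculations.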

Rules in Probability or Laws of Probability

Rule-1: There is no negative probability.

Rule-2: Probability always lies between 0 and 1, inclusive.

Rule-3: The probability of the sample space S is 1. Since the sample space consists of all possible outcomes, one of them must occur. For example, when we toss a coin the sample space is {heads, tails}, and the probability of getting heads or tails is 1.

Rule-4: If we have two events A and B and A ∩ B = ∅, then Pr(A∪B) = Pr(A) + Pr(B). The condition A ∩ B = ∅ means that the events A and B are mutually exclusive. If they are not mutually exclusive, then Pr(A∪B) = Pr(A) + Pr(B) - Pr(A∩B).

Now you may be wondering what mutually exclusive means. It simply means that the two events have no outcomes in common; it does not mean that together they span the whole sample space. For example, when rolling a die, the events A = {1,2} and B = {3,4} are mutually exclusive, even though they do not cover the outcomes 5 and 6.
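Rule-4 can be checked on the die example with a short sketch. It assumes a fair die, so that probabilities reduce to counting; the overlapping events `C` and `D` are extra examples chosen here, not taken from the text above.

```python
from fractions import Fraction

# Sample space for one roll of a fair six-sided die.
S = set(range(1, 7))


def pr(event):
    """Probability of an event under equally likely outcomes."""
    return Fraction(len(event & S), len(S))


# Mutually exclusive events from the text: nothing in common.
A, B = {1, 2}, {3, 4}
disjoint_ok = (A & B == set()) and (pr(A | B) == pr(A) + pr(B))

# Overlapping events (hypothetical example): the shared outcome 3
# would be counted twice without the correction term.
C, D = {1, 2, 3}, {3, 4}
overlap_ok = pr(C | D) == pr(C) + pr(D) - pr(C & D)

print(disjoint_ok, overlap_ok)
```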

Rule-5: Extending Rule-4, if we have an infinite number of mutually exclusive sets, [latex]A_i, \; i=1,2,3 \ldots \;, \; A_i \cap A_j = \emptyset \; \text{for all} \;\; i \neq j \;[/latex],

then we can say that: [latex]Pr\left( \bigcup_{i=1}^{\infty}{A_i} \right) = \sum_{i=1}^{\infty} Pr(A_i)[/latex]
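To get a feel for Rule-5, here is a small sketch built on an example that is an assumption of this illustration, not part of the rules above: toss a fair coin repeatedly and let A_i be the event "the first head appears on toss i". These events are mutually exclusive, Pr(A_i) = (1/2)^i, and their union is the event "a head eventually appears". The partial sums of the probabilities approach 1.

```python
from fractions import Fraction


def pr_first_head_on_toss(i):
    """Pr(A_i), where A_i = 'first head appears on toss i' (fair coin)."""
    return Fraction(1, 2) ** i


def partial_sum(n):
    """Sum of Pr(A_i) for i = 1..n."""
    return sum(pr_first_head_on_toss(i) for i in range(1, n + 1))


# The partial sums equal 1 - (1/2)^n, so they get closer to 1 as n grows.
for n in (1, 2, 5, 10, 20):
    print(n, float(partial_sum(n)))
```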

Rule-6: If we have [latex]Pr(A) = 0.3[/latex], then its complement has probability [latex]Pr(\overline{A}) = 1-Pr(A) = 1-0.3 = 0.7[/latex].

Many students complain that they did not understand a single word of what just happened, and there is no doubt that probability is not easy to understand at first. Some minds don’t get it at all; as one scientist said:

God may not play dice with the Universe, but we’re not Gods so we have to keep rolling the dice until we get it right.

Everything else reaches your mind through sight or hearing, but math is the one subject that reaches your brain through practice by hand. So we would like you to solve the following problems; don’t worry, we will provide solutions as well. They will help you understand probability theory more clearly.

Problem-1:

An experiment consists of rolling n (six-sided) dice and recording the sum of the n rolls. How many outcomes are there to this experiment?

Solution: Notice for n=1 die, there are 6 possible outcomes of the sum (1-6).

Now for n=2 dice, there are 11 possible outcomes of the sum (2-12).

Similarly for n=3 dice, you can obtain a sum from 3-18, which is 16 possible outcomes.

In general, for n dice the sum ranges from n to 6n, so there are [latex]6n-n+1[/latex] possible outcomes, which is equivalent to [latex]5n+1[/latex].
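The count can be confirmed by brute force for small n. This is only a sketch: it enumerates every combination of rolls, so the work grows as 6^n and it is practical only for a handful of dice. The function name `distinct_sums` is made up for this example.

```python
from itertools import product


def distinct_sums(n, sides=6):
    """Set of all sums that n dice with the given number of sides can show."""
    faces = range(1, sides + 1)
    return {sum(rolls) for rolls in product(faces, repeat=n)}


# Compare the enumerated count with the formula 5n + 1.
for n in range(1, 5):
    print(n, len(distinct_sums(n)), 5 * n + 1)
```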

Problem-2: An experiment consists of selecting a number x from the interval [0,1) and a number y from the interval [0,2) to form a point (x,y).

- Describe the sample space of all points.

- What fraction of the points in the space satisfy x > y ?

- What fraction of points in the sample space satisfy x=y ?

Solution:

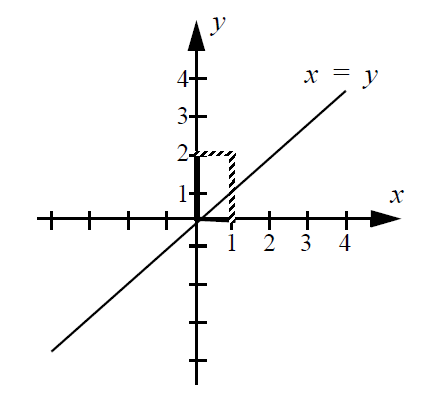

- Consider the x-y plane. The sample space will be all possible ordered pairs where x is in [0,1) and y is in [0,2). This will look like a rectangle with the bottom left corner at the origin, a width of 1 and a height of 2, as shown in the figure. Note that the lines x=1 and y=2 form the right and top sides of the rectangle and are not included in the sample space, so they are represented with dashed lines.

- On the x-y plane with the rectangle drawn from part (1), draw the line x=y. The area below this line but still inside the rectangle will satisfy x > y. From the graph, you will see that this region is a triangle of area 1/2 inside a rectangle of area 2, which is 1/4 of the total area. Thus, the fraction of points in the space satisfying x > y is 1/4.

- Zero. The line x=y has zero area, and therefore the fraction of the area of the line relative to the area of the rectangle is zero.
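The 1/4 answer can also be estimated numerically. The sketch below assumes, as the area argument does, that points are spread uniformly over the rectangle; the function name, trial count, and seed are arbitrary choices for this illustration.

```python
import random


def estimate_fraction_x_greater_y(trials=200_000, seed=0):
    """Monte Carlo estimate of the fraction of points with x > y."""
    rng = random.Random(seed)   # fixed seed so the run is repeatable
    hits = 0
    for _ in range(trials):
        x = rng.random()        # uniform on [0, 1)
        y = 2 * rng.random()    # uniform on [0, 2)
        if x > y:
            hits += 1
    return hits / trials


estimate = estimate_fraction_x_greater_y()
print(estimate)  # should land close to 0.25
```

The estimate fluctuates from seed to seed, but with this many trials it should stay within about a percentage point of 1/4.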

Now I would like to give some proofs related to the Rules of Probability

Problem-3: Prove the finite version of Rule-5, which states that for any finite number M of mutually exclusive sets, [latex]A_i, \; i=1,2,\ldots,M \;, \; A_i \cap A_j = \emptyset \; \text{for all} \;\; i \neq j \;[/latex],

the following holds: [latex]Pr\left( \bigcup_{i=1}^{M}{A_i} \right) = \sum_{i=1}^{M} Pr(A_i)[/latex]

Solution: I will prove this by using mathematical induction. For the case M = 2 we have:

[latex]Pr\left(\bigcup_{i=1}^{2}A_{i}\right)=\sum_{i=1}^{2}Pr\left(A_{i}\right)[/latex]

We know this to be true from Rule-4. Now assume that the proposition is true for M=k:

[latex]Pr\left(\bigcup_{i=1}^{k}A_{i}\right)=\sum_{i=1}^{k}Pr\left(A_{i}\right)[/latex]

We need to prove that this is true for M=k+1. Define an auxiliary event B :

[latex]B=\left(\bigcup_{i=1}^{k}A_{i}\right).[/latex]

Then the event can be written as

[latex]\left(\bigcup_{i=1}^{k+1}A_{i}\right)=\left(B\cup A_{k+1}\right)[/latex]

and therefore

[latex]Pr\left(\bigcup_{i=1}^{k+1}A_{i}\right)=Pr\left(B\cup A_{k+1}\right).[/latex]

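The events B and [latex]A_{k+1}[/latex] are mutually exclusive, because every [latex]A_i[/latex] with [latex]i \leq k[/latex] is disjoint from [latex]A_{k+1}[/latex]. Since the proposition is true for M=2, we can rewrite the above equation as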

[latex]Pr\left(\bigcup_{i=1}^{k+1}A_{i}\right)=Pr\left(B\right)+Pr\left(A_{k+1}\right).[/latex]

Since the proposition is true for M=k we can rewrite this as:

[latex]\begin{eqnarray*}
Pr\left(\bigcup_{i=1}^{k+1}A_{i}\right) & = & \sum_{i=1}^{k}Pr\left(A_{i}\right)+Pr\left(A_{k+1}\right)\\
& = & \sum_{i=1}^{k+1}Pr\left(A_{i}\right).
\end{eqnarray*}[/latex]

Hence the proposition is true for M=k+1, and by induction it is true for every finite [latex]M \geq 2[/latex].

These problems are adapted from the textbook Probability and Random Processes by Scott Miller and Donald Childers, 2nd edition.

To see more proofs like this please visit this article.