We provide a brief introduction to machine learning in our article “Introduction to Machine Learning”. In this article, we explore the machine learning pipeline, that is, what a machine learning system looks like from end to end.

Overview of the Machine Learning Pipeline

The machine learning pipeline explains the process of how a machine learning system operates. The pipeline comprises the following primary components:

- Real World: Data is usually acquired from the real world around us. Examples include readings from sensors measuring ambient air temperature and images of different breeds of cats and dogs.

- Data Acquisition: Data acquisition is the process of collecting data from the real or physical world. This can be done by humans or automated systems. For example, a camera on a street captures images of cars and pedestrians, or a researcher collects data for a clinical trial to observe the effects of a new drug. The collected data is also called “raw data”.

- Data Cleaning & Structuring: Most of the data acquired through automated systems or even by humans requires cleaning and structuring. There can be many missing values, outliers (extreme cases), repeated data samples, and so on. The process of data cleaning involves fixing these data samples before using them for training purposes. Another aspect is to store and properly structure these data samples in a way that can be handled by the machine learning system.

- Machine Learning System: The main block of this entire pipeline is the machine learning system. Here, the machine/model is trained by a machine learning algorithm. After training, the model is fed testing data, and its output is then used for decision-making.

- Decision Making: The output of the machine learning system is used for decision-making. These decisions can be made by humans or automated systems. For instance, a machine learning system built for stock prediction might indicate that the price of a certain stock is going to rise. In high-frequency trading, automated systems act on such predictions and buy or sell stocks without human intervention.
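The data cleaning step described above can be illustrated with a minimal Python sketch. It assumes hypothetical temperature readings stored as `(timestamp, value)` pairs. The function name `clean_readings`, the valid range of -50 to 60, and the choice of median imputation are all assumptions made for illustration. Real pipelines choose these rules per dataset.

```python
from statistics import median


def clean_readings(readings, low=-50.0, high=60.0):
    """Clean a list of (timestamp, temperature) samples.

    Hypothetical rules, for illustration only:
      * drop repeated samples (a timestamp that was already seen),
      * treat values outside [low, high] as outliers and mark them missing,
      * fill missing values with the median of the valid readings.
    """
    seen = set()
    deduped = []
    for ts, value in readings:
        if ts in seen:  # repeated data sample
            continue
        seen.add(ts)
        deduped.append((ts, value))

    # Mark outliers as missing (None) so they are imputed below.
    marked = [
        (ts, v if v is not None and low <= v <= high else None)
        for ts, v in deduped
    ]

    valid = [v for _, v in marked if v is not None]
    if not valid:
        raise ValueError("no valid readings to impute from")
    fill = median(valid)

    return [(ts, fill if v is None else v) for ts, v in marked]


# Raw data with a missing value, a repeated sample, and an outlier.
raw = [(1, 21.5), (2, None), (2, None), (3, 999.0), (4, 22.5), (5, 23.0)]
cleaned = clean_readings(raw)
print(cleaned)
# [(1, 21.5), (2, 22.5), (3, 22.5), (4, 22.5), (5, 23.0)]
```

The output is a structured list with one sample per timestamp and no gaps, which is the kind of input a training algorithm can consume directly.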

Impact on the Real World

The decision-making system directly influences the real world. For instance, if a machine learning system suggests that the price of a certain commodity is going to rise, people and companies might start hoarding that commodity, leading to a shortage and potentially a crisis. Machine learning system designers need to understand how their system operates, stay aware of current real-world conditions, and tune their systems accordingly to make better decisions.

Focus on the Machine Learning System

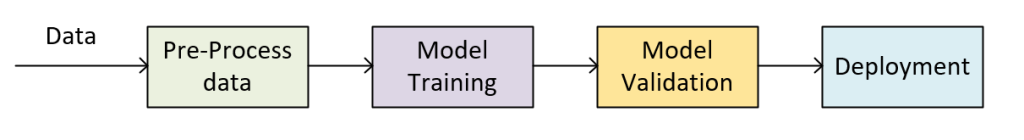

For now, we will focus on the machine learning system and group “Real World,” “Data Acquisition,” and “Data Cleaning & Structuring” into one block: “Pre-Processing.” The modified machine learning pipeline has the following sub-blocks:

- Pre-Processing: This step involves cleaning the data by removing outliers and imputing missing values, as well as reducing dimensions and performing feature learning and feature engineering. Pre-processing is a crucial and extensive research area. The output of this block is also referred to as “processed data”.

Example: ChatGPT is trained on data scraped from the internet, and a lot of the content on the internet is nonsense. One explanation offered for any edge ChatGPT has over other LLM-based models, such as Google Gemini, is that OpenAI invested heavily in pre-processing its data.

Sometimes, the pre-processing block also contains an optional “Feature selection” or “Feature learning” sub-block, which performs feature extraction.

- Model Training: Depending on the data and the problem, this component trains the machine learning model using a machine learning algorithm suited to the specific application and data type. Data from the pre-processing stage is used for training.

- Model Validation: Model validation is a crucial step in the machine learning process. Typically, data is split into training and testing sets. The training data is used to train the model, while the testing data is used to evaluate its performance. This process checks whether the model generalizes well to new, unseen data.

If the model’s performance on the testing data is inadequate, the machine learning engineer may need to retune the model, check the training data for issues, and ensure that no testing data has leaked into the training data. This iterative process helps improve the model’s accuracy and reliability.

- Deployment: Once the machine learning model passes the testing stage, it is deployed in real-world applications. Examples include ChatGPT, Copilot, Google Gemini, Google Maps, and many other AI-based applications.
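The training and validation sub-blocks can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the data is synthetic, the model is a simple least-squares line, and the helper names (`train_test_split`, `fit_line`, `mean_squared_error`) and the threshold `ACCEPTABLE_MSE` are hypothetical choices, not part of any particular library.

```python
import random


def train_test_split(samples, test_fraction=0.25, seed=0):
    """Shuffle the samples and split them into disjoint train and test sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_line(train):
    """Model training: least-squares fit of y = slope * x + intercept."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in train)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def mean_squared_error(model, data):
    """Model validation metric: average squared prediction error."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in data) / len(data)


# Synthetic "processed data": y = 2x + 1 plus a small amount of noise.
rng = random.Random(42)
data = [(float(x), 2.0 * x + 1.0 + rng.uniform(-0.05, 0.05)) for x in range(20)]

train, test = train_test_split(data)

# Contamination check: no test sample may also appear in the training set.
assert not set(train) & set(test)

model = fit_line(train)                      # train on the training set only
test_mse = mean_squared_error(model, test)   # evaluate on unseen data

ACCEPTABLE_MSE = 0.05  # hypothetical acceptance threshold for deployment
ready = test_mse < ACCEPTABLE_MSE
print(f"test MSE = {test_mse:.5f}, ready for deployment: {ready}")
```

If `ready` were false, the engineer would go back to retune the model or inspect the training data, which is the iterative loop described above.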